In brief: Microsoft has demonstrated Quake II running on a generative AI model for real-time gaming called WHAMM. While the game has full controller support, it runs at very low frame rates. Microsoft says the demo showcases the model’s capabilities rather than presenting a finished gaming product.

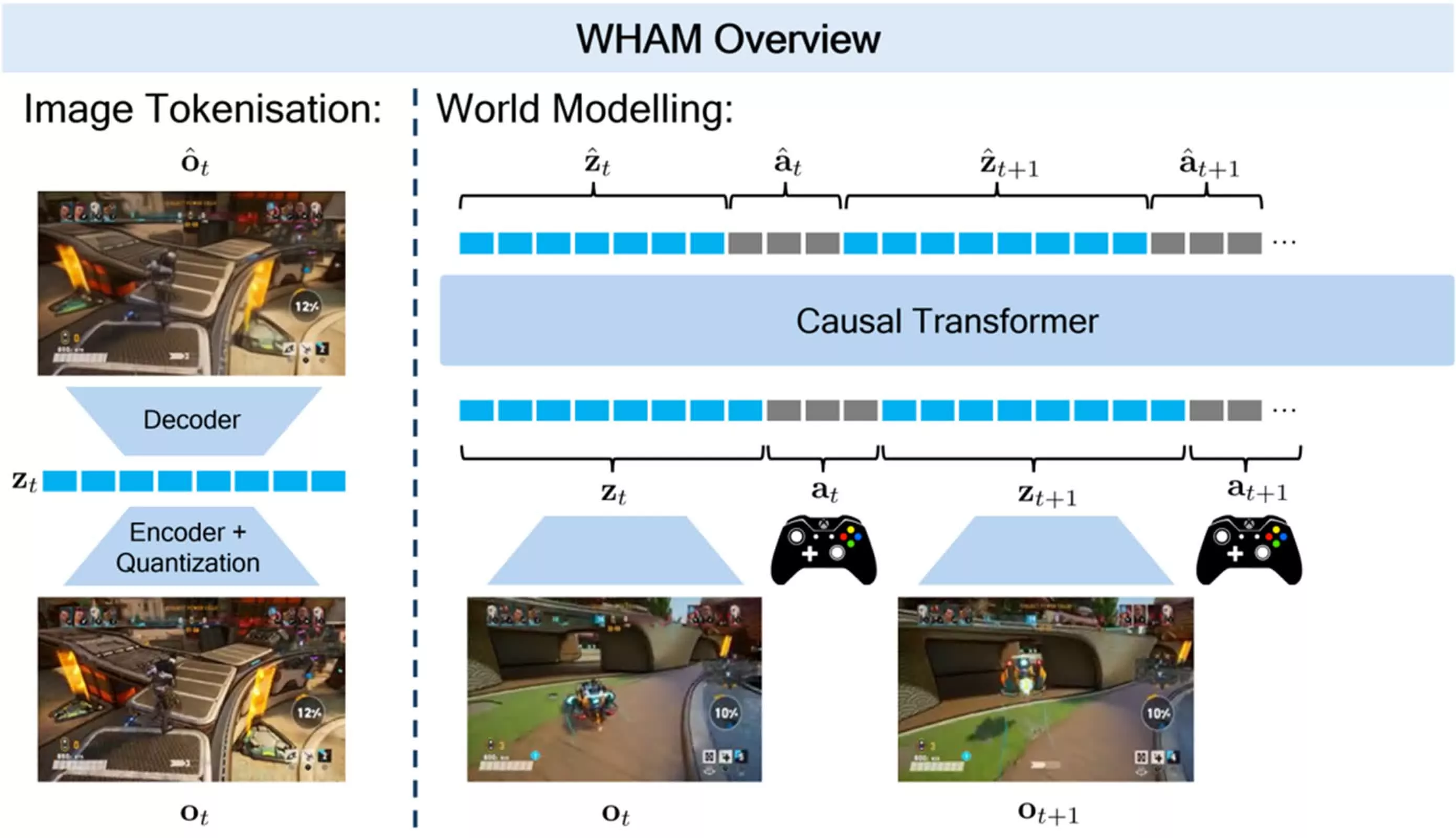

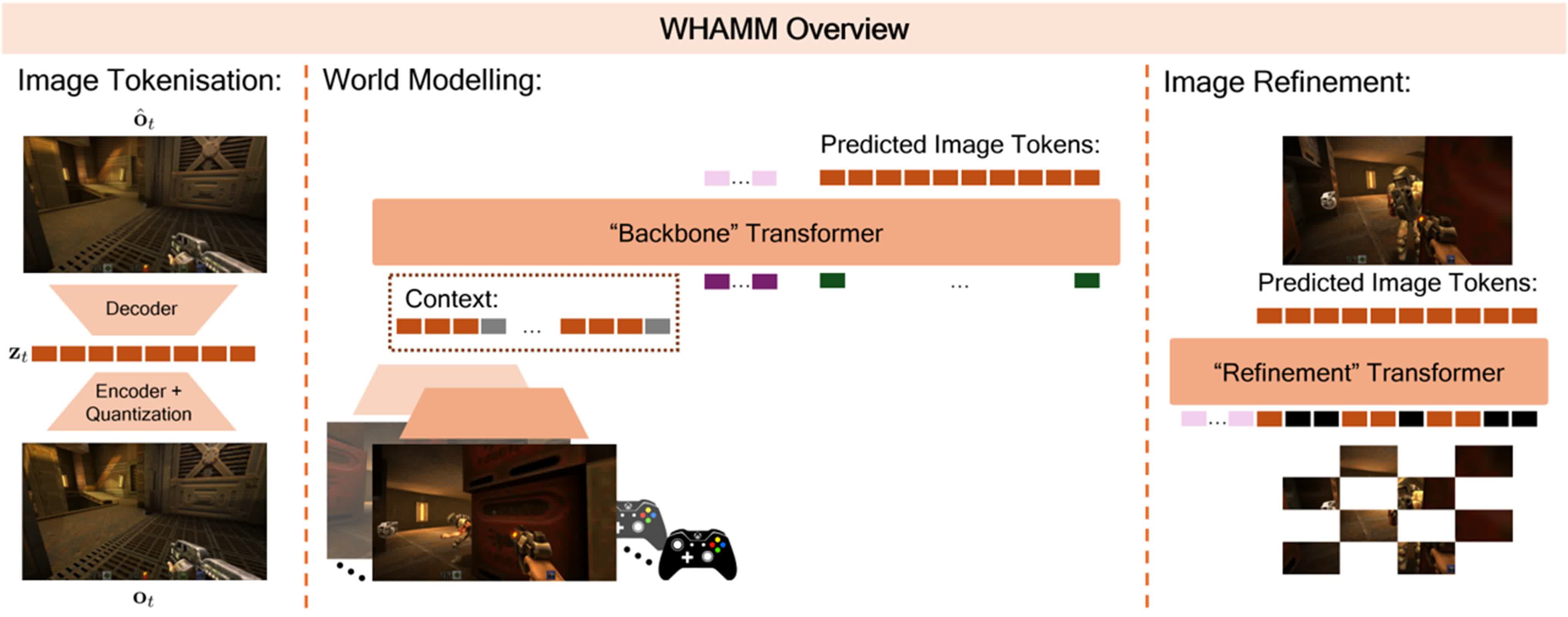

Microsoft’s World and Human Action MaskGIT Model, or WHAMM, builds on its first WHAM-1.6B version, launched in February. Unlike its predecessor, this iteration introduces faster visual output using a MaskGIT-style architecture that generates image tokens in parallel. Moving away from the autoregressive method, which predicts tokens one at a time, reduces WHAMM’s latency and enables real-time image generation, an essential step toward smooth gameplay interaction.
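To make the difference concrete, here is a minimal toy sketch of the two decoding strategies. It is not Microsoft’s implementation: `toy_predict` is a hypothetical stand-in for a model forward pass that returns random guesses, the token count and step count are illustrative numbers rather than WHAMM’s real figures, and the cosine unmasking schedule is simply one common choice for MaskGIT-style decoders.

```python
import math
import random

MASK = -1  # sentinel for a token that has not been decoded yet


def toy_predict(tokens, rng, vocab_size=16):
    """Hypothetical stand-in for one model forward pass.

    Returns a (token, confidence) guess for every position at once.
    """
    return [(rng.randrange(vocab_size), rng.random()) for _ in tokens]


def autoregressive_decode(num_tokens, rng):
    """Decode one token per forward pass, left to right."""
    tokens = [MASK] * num_tokens
    passes = 0
    for i in range(num_tokens):
        guesses = toy_predict(tokens, rng)
        passes += 1
        tokens[i] = guesses[i][0]
    return tokens, passes


def maskgit_decode(num_tokens, num_steps, rng):
    """Decode all tokens in a fixed number of parallel refinement steps."""
    tokens = [MASK] * num_tokens
    passes = 0
    for step in range(1, num_steps + 1):
        guesses = toy_predict(tokens, rng)
        passes += 1
        masked = [i for i, t in enumerate(tokens) if t == MASK]
        # Cosine schedule: how many tokens should still be masked after this step.
        keep_masked = math.floor(num_tokens * math.cos(math.pi / 2 * step / num_steps))
        num_to_fill = max(0, len(masked) - keep_masked)
        # Commit the most confident guesses; the rest stay masked for the next step.
        masked.sort(key=lambda i: guesses[i][1], reverse=True)
        for i in masked[:num_to_fill]:
            tokens[i] = guesses[i][0]
    return tokens, passes


rng = random.Random(0)
_, ar_passes = autoregressive_decode(64, rng)
frame_tokens, mg_passes = maskgit_decode(64, 8, rng)
print(ar_passes, mg_passes)  # 64 8
```

In this toy setup the autoregressive loop needs one forward pass per token, while the MaskGIT-style loop fills the same 64 positions in 8 passes. That gap in pass count is the intuition behind the latency reduction described above.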

The model’s training process also shows substantial progress. While WHAM-1.6B required seven years of gameplay data for training, developers taught WHAMM on just one week of curated Quake II gameplay. They achieved this efficiency by using data from professional game testers who focused on a single level. The generative AI’s visual output resolution also got a boost, going from 300 x 180 pixels to 640 x 360 pixels, which improves image quality without significant changes to the underlying encoder-decoder architecture.

Despite these technical advances, WHAMM is far from perfect and remains more of a research experiment than a fully realized gaming solution. The model shows an impressive ability to adapt to user input. Unfortunately, it struggles with lag and graphical anomalies.

Players can perform basic actions such as shooting, jumping, crouching, and interacting with enemies. However, enemy interactions are particularly flawed. Characters often appear fuzzy, and combat mechanics are inconsistent, with errors in health tracking and damage stats.

The limitations extend beyond combat mechanics. The model has a limited context length: it forgets objects that leave the player’s view for longer than nine-tenths of a second. This shortcoming creates unusual gameplay quirks, such as teleportation or randomly spawning enemies when changing camera angles.
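The forgetting behavior can be pictured as a fixed-length sliding window over recent frames. The sketch below is only an illustration of that idea, not how WHAMM is built: the 0.9-second figure comes from the article, while the frame rate (`ASSUMED_FPS`), the function `remembered_objects`, and the per-frame sets of object names are all assumptions made for the example.

```python
from collections import deque

CONTEXT_SECONDS = 0.9  # context length reported in the article
ASSUMED_FPS = 10       # assumption for illustration; the article gives no frame rate
CONTEXT_FRAMES = round(CONTEXT_SECONDS * ASSUMED_FPS)  # 9 frames under this assumption


def remembered_objects(frames, context_frames=CONTEXT_FRAMES):
    """Yield, for each frame, every object visible somewhere in the context window."""
    window = deque(maxlen=context_frames)  # oldest frame drops out automatically
    for visible in frames:
        window.append(set(visible))
        yield set().union(*window)


# An enemy is on screen for 3 frames, then the player looks away for 12 frames.
frames = [{"enemy"}] * 3 + [set()] * 12
memory = list(remembered_objects(frames))

print("enemy" in memory[10])  # True: last sighting is still inside the window
print("enemy" in memory[11])  # False: the enemy has fallen out of context
```

Once the last frame containing the enemy slides out of the window, nothing in the model’s input says the enemy ever existed, which is consistent with enemies vanishing or reappearing after a camera turn.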

Additionally, the scope of WHAMM’s simulation is limited to a single level of Quake II. Attempts to move beyond that point freeze the image generation due to a lack of recorded data. Latency issues also detract from the experience when the model is scaled for public use.

While engaging with WHAMM can be enjoyable as a novelty, Microsoft did not intend to replicate the original Quake II experience. Its AI developers were only exploring machine-learning techniques that they could use to create interactive media.

https://www.youtube.com/watch?v=4ua2fopqpns

Microsoft’s team explored WHAMM’s possibilities amid broader discussion about AI’s role in creative industries. OpenAI recently faced backlash over its Ghibli-inspired AI creations, highlighting skepticism about whether AI can replicate human artistry.

Redmond has positioned WHAMM as an example of AI augmenting rather than replacing human creativity, a philosophy echoed by Nvidia’s ACE technology, which enhances lifelike NPCs in games such as inZOI. While fully AI-generated games and movies remain elusive, innovations such as WHAMM signal they could be right around the corner.

Looking ahead, Microsoft envisions new forms of interactive media enabled by generative models such as WHAMM. The company hopes that future iterations will address these shortcomings while empowering game developers to craft emergent narratives enriched by AI-powered tools.