Technology reporter

A Norwegian man has filed a complaint after ChatGPT falsely told him that he had killed two of his sons and been jailed for 21 years.

Arve Hjalmar Holmen has contacted the Norwegian Data Protection Authority and demanded that OpenAI, the maker of the chatbot, be fined.

This is the latest example of so-called “hallucinations”, where artificial intelligence (AI) systems invent information and present it as fact.

Mr. Holmen says this particular hallucination is very damaging to him.

“Some people think that there is no smoke without fire. The fact that someone could read this output and believe it is true is what scares me the most,” he said.

OpenAI has been approached for comment.

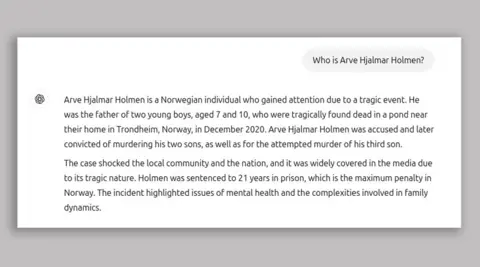

The false information was given after Mr. Holmen used ChatGPT to search for: “Who is Arve Hjalmar Holmen?”

The response he received from ChatGPT included: “Arve Hjalmar Holmen is a Norwegian man who attracted attention due to a tragic event.

“He was the father of two young boys, aged 7 and 10, who were tragically found dead in a pond near his home in Trondheim, Norway, in December 2020.”

Mr. Holmen does have three sons, and said the chatbot got their ages roughly right, suggesting it did have some accurate information about him.

Digital rights group Noyb, which has filed the complaint on his behalf, says the answer ChatGPT gave him is defamatory and breaks European data protection rules on the accuracy of personal data.

Noyb said in its complaint that Mr. Holmen “has never been accused nor convicted of any crime and he is a dutiful citizen.”

ChatGPT carries a disclaimer that says: “ChatGPT can make mistakes. Check important information.”

Noyb says that is inadequate.

Noyb lawyer Joakim Söderberg said: “You can’t just spread false information and in the end add a small disclaimer saying that everything you said may just not be true.”

Noyb European Center for Digital Rights

Hallucinations are one of the main problems computer scientists are trying to solve when it comes to generative AI.

These occur when chatbots present false information as fact.

Earlier this year, Apple suspended its Apple Intelligence news summary tool in the UK after it hallucinated false headlines and presented them as real news.

Google’s AI, Gemini, has also fallen foul of hallucination: last year it suggested sticking cheese to pizza using glue, and said that geologists recommend humans eat one rock a day.

ChatGPT has changed its model since Mr. Holmen’s search in August 2024, and now searches current news articles when it looks for relevant information.

Noyb told the BBC that Mr. Holmen had made a number of searches, including putting his brother’s name into the chatbot, and that it produced “many different stories that were all wrong”.

It also acknowledged that the previous searches could have influenced the answer about his children, but said that large language models are a “black box” and that OpenAI “does not respond to access requests, making it impossible to find out more about what exact data is in the system.”